How to build an LLM-powered carbon footprint analysis app

Learn how to harness the power of the new large language models to build an app to analyze carbon footprints.

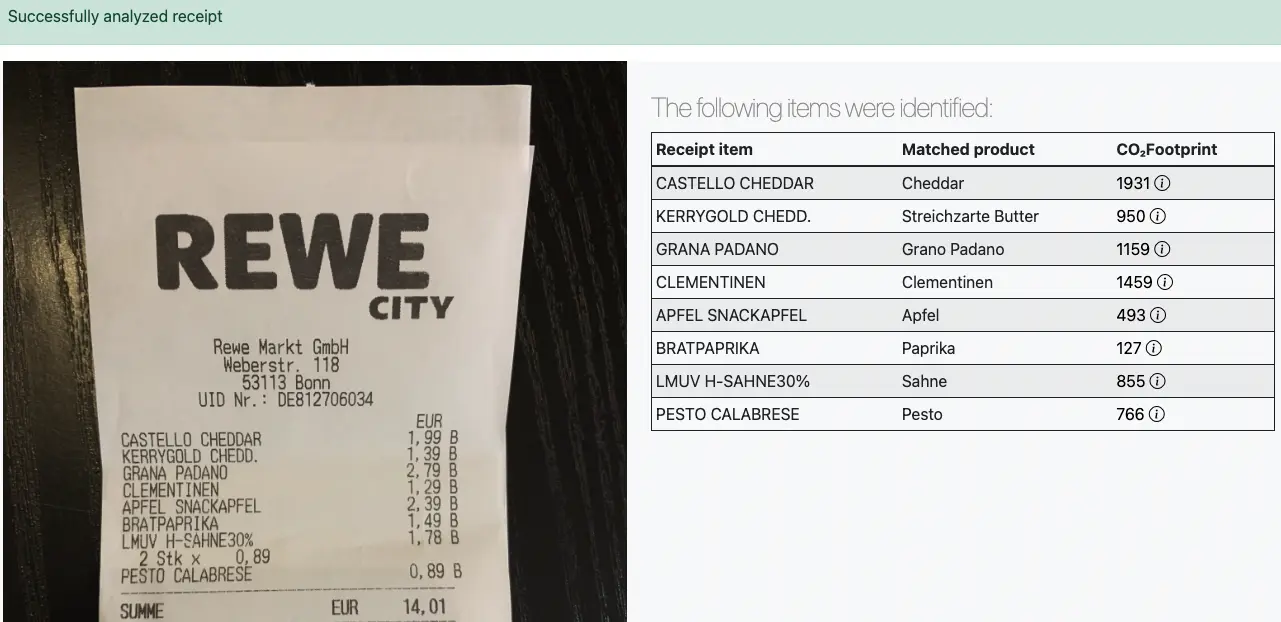

In this post I am going to showcase how you can build an app that analyzes the carbon footprint of your grocery runs using the latest advances in large language models. We will be using the Aleph Alpha LLM API combined with Microsoft's Azure Form Recognizer in Python to build something truly amazing.

The app behind today's post is bigger than usual and initially took months of fine-tuning and work - it was the centerpiece of my previous startup, Project Count. We tried to develop a convenient way to track how your daily actions influence your personal carbon footprint. Since we stopped development of that project, I'm using this post as an opportunity to open-source our code and show more people how you can use the advances in AI to make a positive impact.

The app is currently available for testing here: https://40406-3000.2.codesphere.com/ (Subject to availability of my free API credits :D)

The entire code discussed here today alongside some example receipts can be found on GitHub: https://github.com/codesphere-cloud/large-language-example-app

If you want to follow along, check it out locally or on Codesphere and grab yourself the two API keys required. Both of them allow you to get started for free!

How you can get it running

Clone the repository locally or in Codesphere via: https://codesphere.com/https://github.com/codesphere-cloud/large-language-example-app

- Run `pipenv install`
- Get an API key for the large language model API from Aleph Alpha (free credits provided upon signup) https://app.aleph-alpha.com/signup
- Sign up to Microsoft Azure and create an API key for the Form Recognizer (12 months free + free plan with rate limits afterwards) https://azure.microsoft.com/en-us/free/ai/
- Set the `ALEPH_KEY` env variable
- Set the `AZURE_FORM_ENDPOINT` env variable
- Set the `AZURE_FORM_KEY` env variable
- Run `pipenv run python run.py`
- Open localhost on port 3000
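If the app fails right after startup, a missing key is the most likely cause. Here is a minimal sketch of a check you could run before starting the server. The helper name `missing_env_vars` is my own and not part of the repository; only the three variable names come from the setup steps above.

```python
import os

# Variable names taken from the setup steps above
REQUIRED_VARS = ("ALEPH_KEY", "AZURE_FORM_ENDPOINT", "AZURE_FORM_KEY")


def missing_env_vars(environ=None):
    """Return the required variable names that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not environ.get(name)]


# Example with a hand-made environment where only one key is set
print(missing_env_vars({"ALEPH_KEY": "my-secret-key"}))
```

Calling `missing_env_vars()` without arguments checks the real environment of the current process.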

How it's built part 1 - components / frameworks

| Framework | What it does |
|---|---|
| Python (Flask) | Puts everything together, runs calculations & server |
| Aleph Alpha | Semantic embeddings for similarity via LLM API |
| Microsoft Form Recognizer | OCR service to turn pictures of receipts into usable structured data |
| Jinja | Frontend template engine, generates the HTML served to users |
| Bootstrap | CSS utility functions for styling the frontend |

Basically, we are using Python, more specifically Flask, to put it all together and do some data manipulation. The actual AI work is done by two third-party providers: Microsoft for the Form Recognizer OCR (because it's outstanding and free to start) and Aleph Alpha to create semantic embeddings based on their large language model. We could easily switch to OpenAI's GPT-4 API for this as well - the main reason we use Aleph Alpha here is that the initial version was built before the ChatGPT release, and OpenAI's pricing did not make it that easy to get started back then. I'd love to see a performance comparison between the two and I welcome anyone to fork the repo and try this themselves - let me know!

There are a lot of great tutorials out there on how to set up a Flask app in general, so I will focus on the receipt analysis part, which I basically just embedded into a single-route Flask app based on the standard template.

How it's built part 2 - analysis.py

From the front end route we send the image bytes submitted via a file upload form.

The first function is called azure_form_recognition. It takes the image and runs it against Microsoft's OCR service, Form Recognizer, which has a pre-built model to recognize receipts.

We iterate over the result items and grab fields like product description, quantity, total and the store if available. I also added some data manipulation to recognize whether a quantity is an integer or a float written with , or . as the decimal separator, which happens for weighed items like loose fruit and vegetables. It's not 100% correct for all types of receipts, but it was tested and optimized on real user data (>100 German receipts).

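```python
import os
import re

import pandas as pd
from azure.ai.formrecognizer import DocumentAnalysisClient
from azure.core.credentials import AzureKeyCredential

# Assumption: endpoint and key come from the env variables set during setup
endpoint = os.environ["AZURE_FORM_ENDPOINT"]
key = os.environ["AZURE_FORM_KEY"]

# Matches a number; "," is later replaced by "." before converting to float
QUANTITY_PATTERN = r"[-+]?[.]?[\d]+(?:,\d\d\d)*[\.]?\d*(?:[eE][-+]?\d+)?"


def azure_form_recognition(image_input):
    document = image_input
    document_analysis_client = DocumentAnalysisClient(
        endpoint=endpoint, credential=AzureKeyCredential(key)
    )
    # "prebuilt-receipt" is the pre-built receipt model
    poller = document_analysis_client.begin_analyze_document("prebuilt-receipt", document)
    receipts = poller.result()

    # Defaults so the function still returns if nothing was recognized
    store, d = "Unknown store", []
    for receipt in receipts.documents:
        if receipt.fields.get("MerchantName"):
            store = receipt.fields.get("MerchantName").value
        else:
            store = "Unknown store"
        if receipt.fields.get("Items"):
            d = []
            for item in receipt.fields.get("Items").value:
                item_name = item.value.get("Description")
                if not item_name:
                    continue
                quantity_field = item.value.get("Quantity")
                total_field = item.value.get("TotalPrice")
                if quantity_field and quantity_field.value is not None:
                    raw = re.findall(QUANTITY_PATTERN, quantity_field.content)[0]
                    quantity = float(raw.replace(",", "."))
                else:
                    quantity = 1  # no quantity on the receipt: assume one item
                d.append({
                    "description": item_name.value,
                    "quantity": quantity,
                    "total": total_field.value if total_field else 1,
                })
    grocery_input = pd.DataFrame(d)
    return grocery_input, store
```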
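The quantity handling is the part of this function that is easiest to get wrong, so here it is on its own as a small sketch. Note that `parse_quantity` and `is_weighed` are hypothetical helper names, and the pattern is a simplified one I chose for illustration, not the exact regular expression from the repository.

```python
import re

# Simplified pattern: digits with an optional "," or "." decimal part
_NUMBER = re.compile(r"\d+(?:[.,]\d+)?")


def parse_quantity(text, default=1.0):
    """Parse an OCR quantity string such as "2", "0,532 kg" or "1.25"."""
    if not text:
        return default
    match = _NUMBER.search(text)
    if match is None:
        return default
    return float(match.group().replace(",", "."))


def is_weighed(quantity):
    """Treat non-integer quantities as weights (e.g. loose fruit in kg)."""
    return quantity % 1 != 0


for raw in ["2", "0,532 kg", "1.25", None]:
    quantity = parse_quantity(raw)
    print(raw, quantity, is_weighed(quantity))
```

Falling back to a quantity of 1 when nothing can be parsed mirrors the default used in the function above.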

The output dataframe is now passed to a function called match_and_merge_combined, which takes the structured OCR result data, the Excel-based mappings for fuzzy string matching and the semantic embedding dictionary, which we obtained by running an LLM-based semantic embedding algorithm over the entire dataset (more on this later).

The mapping dataset with the carbon footprints is our own - over 6 months of research went into it. We combined publicly available data with other datasets and field studies on typical package sizes in German supermarkets.

```python
# fuzzy_process is the fuzzy string matching module imported in analysis.py
# (the import is not part of this excerpt)
def match_and_merge_combined(df1: pd.DataFrame, df2: pd.DataFrame, col1: str, col2: str,
                             embedding_dict, cutoff: int = 80, cutoff_ai: int = 80,
                             language: str = 'de'):
    # Adding an empty row that unmatched items are mapped to
    df2 = df2.reindex(list(range(0, len(df2) + 1))).reset_index(drop=True)
    index_of_empty = len(df2) - 1

    # Context - provides the context for our large language models
    if language == 'de':
        phrase = 'Auf dem Kassenzettel steht: '
    else:
        phrase = 'The grocery item is: '

    # First attempt a fuzzy string based match = faster & cheaper than semantic match
    indexed_strings_dict = dict(enumerate(df2[col2]))
    matched_indices = set()
    ordered_indices = []
    scores = []
    for s1 in df1[col1]:
        match = fuzzy_process.extractOne(
            query=s1,
            choices=indexed_strings_dict,
            score_cutoff=cutoff
        )
        # If match below cutoff fetch semantic match
        score, index = match[1:] if match is not None else find_match_semantic(embedding_dict, phrase + s1)[1:]
        # Even the best match is too weak: map the item to the empty row
        if score < cutoff_ai:
            index = index_of_empty
        matched_indices.add(index)
        ordered_indices.append(index)
        scores.append(score)
    # Excerpt ends here; the remaining merge steps are in the repository
```

The mapping is called combined because we first run a fuzzy string based matching algorithm with a cutoff threshold - this is faster and computationally cheaper than running the LLM-based semantic mapping and takes care of easy matches like bananas -> banana. Only if there is no match within the defined cutoff do we call the find_match_semantic function.
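To make the two-stage idea easier to play with, here is a stripped-down sketch that runs without any API key. It is not the code from the repository: I use `difflib` from the standard library as a stand-in for the fuzzy matcher, and `fake_semantic` is a made-up placeholder for the embedding lookup.

```python
from difflib import SequenceMatcher


def fuzzy_score(a, b):
    """Similarity between two strings on a 0-100 scale (stdlib stand-in)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() * 100


def match_item(description, choices, semantic_fallback, cutoff=80, cutoff_ai=80):
    """Return (matched choice or None, score, method used)."""
    best = max(choices, key=lambda c: fuzzy_score(description, c))
    score = fuzzy_score(description, best)
    if score >= cutoff:
        return best, score, "fuzzy"
    # Fuzzy match too weak: fall back to the more expensive semantic match
    best, score = semantic_fallback(description)
    if score < cutoff_ai:
        return None, score, "none"
    return best, score, "semantic"


def fake_semantic(description):
    """Hypothetical stand-in for an embedding lookup, for illustration only."""
    known = {"tenderloin steak": ("beef filet", 91.0)}
    return known.get(description, ("", 0.0))


CHOICES = ["banana", "beef filet", "milk"]
for receipt_item in ["bananas", "tenderloin steak", "toothbrush"]:
    print(receipt_item, "->", match_item(receipt_item, CHOICES, fake_semantic))
```

The cheap string comparison handles near-identical spellings, the fallback is only consulted when that fails, and anything that still scores below the second cutoff stays unmatched.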

This function takes the current item, creates a semantic embedding via Aleph Alpha's API and compares it with all semantic embeddings in the dictionary.

An embedding is basically a high-dimensional vector that represents what the LLM understands about that item and its context. We can then calculate the cosine similarity between embeddings and pick the embedding with the highest similarity (i.e. the lowest cosine distance). Large language models place similar items (within the given context of grocery receipt items) closer to each other, allowing matches like tenderloin steak -> beef filet, and this produces remarkably accurate results on our German receipts. From the chosen embedding we look up which item it belongs to based on the index and therefore get the closest semantic match for each item.
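If the cosine part sounds abstract, this small sketch shows the comparison with plain Python. The three-dimensional vectors are invented for illustration only; real embeddings come from the API and have many more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def closest_item(query_vec, embeddings):
    """Return (item, similarity * 100) for the most similar stored embedding."""
    best = max(embeddings, key=lambda item: cosine_similarity(query_vec, embeddings[item]))
    return best, cosine_similarity(query_vec, embeddings[best]) * 100


# Made-up toy vectors, not real model output
toy_embeddings = {
    "beef filet": [0.9, 0.1, 0.0],
    "banana": [0.0, 0.2, 0.9],
    "milk": [0.1, 0.9, 0.1],
}
query = [0.8, 0.2, 0.1]  # pretend this is the embedding of "tenderloin steak"
item, score = closest_item(query, toy_embeddings)
print(item, round(score))
```

Multiplying by 100 puts the similarity on the same 0-100 scale as the fuzzy matching score, so both can be compared against a cutoff.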

```python
import requests
# Assumption: cosine is SciPy's cosine distance, so 1 - cosine(...) is the similarity
from scipy.spatial.distance import cosine


# API_KEY and model are module-level settings defined elsewhere in the file
def find_match_semantic(embeddings, product_description: str):
    embeddings_to_add = []
    # Request a semantic embedding for the receipt item
    response = requests.post(
        "https://api.aleph-alpha.com/semantic_embed",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "User-Agent": "Aleph-Alpha-Python-Client-1.4.2",
        },
        json={
            "model": model,
            "prompt": product_description,
            "representation": "symmetric",
            "compress_to_size": 128,
        },
    )
    result = response.json()
    embeddings_to_add.append(result["embedding"])

    # Compare the new embedding with every stored embedding
    cosine_similarities = {}
    for item in embeddings:
        cosine_similarities[item] = 1 - cosine(embeddings_to_add[0], embeddings[item])

    # Return (best item, similarity on a 0-100 scale, index of the best item)
    best_item = max(cosine_similarities, key=cosine_similarities.get)
    result = (best_item, cosine_similarities[best_item] * 100, list(cosine_similarities.keys()).index(best_item))
    return result
```

From there we do a bunch of data clean-up, remove NaNs and recalculate the footprint for items with non-integer quantities. The logic: for integer quantities we simply take typical footprint per package * quantity = estimated footprint, whereas for float quantities we calculate typical footprint per 100g * quantity converted to 100g = estimated footprint.

These are of course only estimates, but they have proven to be reasonable on average in our previous startup's app.
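Written out for a single item, the rule above might look like the following sketch. The function name and the example numbers are made up; the only thing taken from the app is the rule itself, including the assumption that non-integer quantities are weights in kg.

```python
def estimate_footprint(quantity, footprint_per_package, footprint_per_100g):
    """Estimate an item's footprint from its receipt quantity.

    Integer quantities count packages; non-integer quantities are
    assumed to be weights in kg.
    """
    if quantity % 1 == 0:
        return quantity * footprint_per_package
    return quantity * 10 * footprint_per_100g  # 1 kg = 10 units of 100 g


print(estimate_footprint(2, 300, 50))    # two packages
print(estimate_footprint(0.5, 300, 50))  # half a kilo of a weighed item
```

One limitation follows directly from the rule: a weighed item of exactly 1 kg looks like an integer quantity and is treated as one package.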

```python
# Detect if item is measured in kg and correct values
is_weighed = ~(merged_df["quantity"] % 1 == 0)
merged_df["footprint"] = (merged_df["quantity"] * merged_df["typical_footprint"]).round(0)
merged_df.loc[is_weighed, "footprint"] = merged_df["quantity"] * 10 * merged_df["footprint_per_100g"]
merged_df.loc[is_weighed, "typical_weight"] = merged_df["quantity"] * 1000
merged_df.loc[is_weighed, "quantity"] = 1

merged_df["footprint"] = merged_df["footprint"].fillna(0)
merged_df["product"] = merged_df["product"].fillna("???")
merged_df = merged_df.drop(["index"], axis=1).dropna(subset=["description"])

# Type conversion to integers
merged_df["footprint"] = merged_df["footprint"].astype(int)

# Set standardized product descriptions
merged_df.loc[(~pd.isna(merged_df["value_from"])), "product"] = merged_df["value_from"]
```

The resulting dataframe is sent to the frontend as a Jinja template variable, then looped over and displayed as a table alongside the initial image.

How it's built part 3 - Create the semantic embedding dict

This is a one-time task for the dataset, repeated only after updating the mapping table. If you don't plan on modifying the dataset (e.g. for new items, different use cases such as home depot products instead of groceries, or translation to other languages) you can skip this step.

The code for this is stored in search_embed.py. It takes an Excel mapping table in the predefined format, adds some context (e.g. this is an item from a grocery receipt) to each item (this needs to be language specific) and runs it through the LLM's semantic embedding endpoint. This task is computationally intensive as it needs to call the API for 1000s of items - therefore we only do this once and store the resulting dictionary as JSON. The file will be quite large, over 50 MB in my example.
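The overall shape of that one-time job can be sketched like this. It is not the content of search_embed.py: the function names are my own, `fake_embed` is a placeholder for the real API call, and reusing the German context phrase from the matching step is an assumption.

```python
import json
import os
import tempfile


def build_embedding_dict(items, embed, context="Auf dem Kassenzettel steht: "):
    """Embed every item once, with the receipt context put in front of it."""
    return {item: embed(context + item) for item in items}


def save_embeddings(embeddings, path):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(embeddings, f)


def load_embeddings(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def fake_embed(text):
    """Hypothetical stand-in for the API call; returns a tiny fake vector."""
    return [float(len(text)), float(sum(map(ord, text)) % 97)]


items = ["Banane", "Rinderfilet"]
embeddings = build_embedding_dict(items, fake_embed)
with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "embeddings_example.json")
    save_embeddings(embeddings, path)
    reloaded = load_embeddings(path)
print(sorted(reloaded))
```

Storing the result on disk is what keeps the app cheap to run: at request time only the single receipt item needs a fresh API call.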

To run this for an updated Excel mapping table, change the lines mentioned in the code comment and run it via pipenv run python search_embed.py - for my ~1000 items this consumes about a quarter of my free Aleph Alpha credits. Make sure you don't run it more often than needed.

How it's built part 4 - Language switch

The model and dataset are optimized for German grocery receipts. I've included an English version of the dataset (based on Google Translate) for demonstration purposes. To use it, simply change the function called from routes from analyze_receipt to analyze_receipt_en; it will then use the translated Excel file and embedding dictionary.

Please be aware that the translations are far from perfect and that I have not tested as extensively how well this version performs in real-world scenarios.

Summary

I hope that you were able to follow along, got your own version running and maybe even got some inspiration on how the new possibilities of large language models can be used to help us live more sustainably.

The GitHub Repo is public and I invite everyone to take it and continue developing from there - from my perspective all my input is now open source and public domain. Feel free to reach out if you want to continue the project or have any questions.

If you haven't checked out Codesphere yet - hosting projects like these (and much more) has never been easier.