Exploring Options for Open-Source Multimodels in 2024

The ability of multimodels to understand several data sources like text, audio, and images enables them to understand and generate nuanced, accurate, and contextually aware responses. We explored some of the best open-source multi-models available out there.

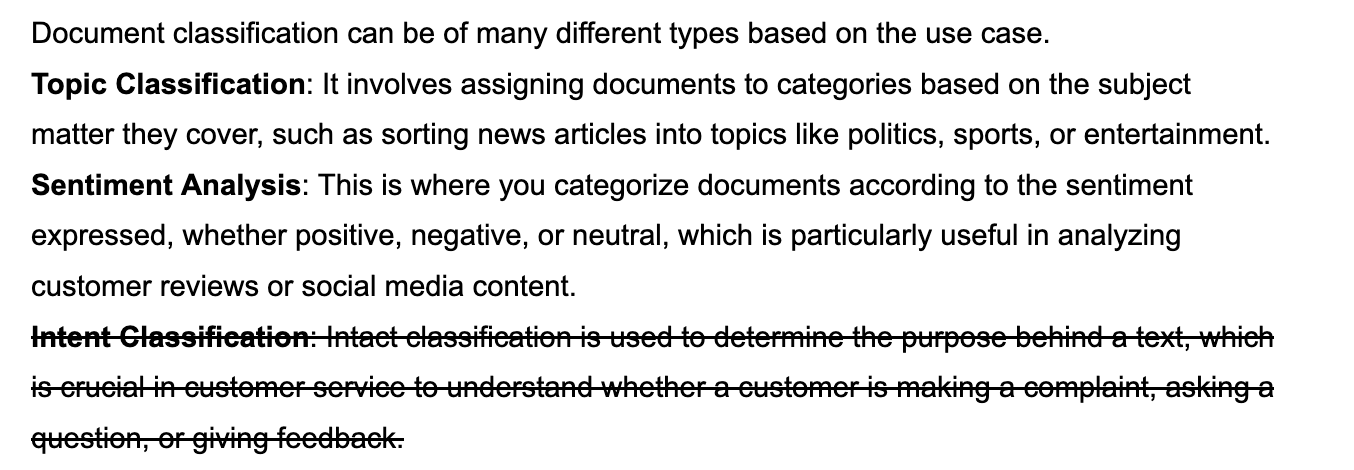

We recently started working on a project at Codesphere, which made our team push the boundaries of what is technically and practically possible. While working on the projects we ran into issues like having color-coded text, tables with numerical data, and text that was struck through.

When I started researching and experimenting, I realized the regular LLMs, no matter how efficient, lacked the capabilities required to handle such data. We found some interesting facts, like when I gave GPT a file that contained striked through text and asked it to summarize the text that was not striked through, it at first did not differentiate between the text. However, upon reinforcing that the result was wrong, I always got the correct output, which is a classic example of model routing. This is where the queries are sent to the cheaper and less compute intensive models, however, if the mistake is pointed out the query gets sent to a potentially a more resource intensive but accurate multimodal model. We also did some more test tasks, more about them later.

However, all these things lead us to the conclusion that for our use case a multimodal will be the only option. So we studied and tested different multimodels and in this article I will talk about what are some of the choices we explored for multimodals.

What is a multimodal and what can it do?

A multimodal model is an advanced type of artificial intelligence (AI) model that can process and integrate multiple forms of data, known as "modalities." These modalities can include text, images, audio, video, and even sensor data. The most interesting feature of a multimodal is its ability to understand and reason across these different types of data simultaneously. This allows it to generate more detailed and accurate outputs. For example, when analyzing a news article with an accompanying photo, a multimodal model can consider both the text and the image to provide more insightful information.

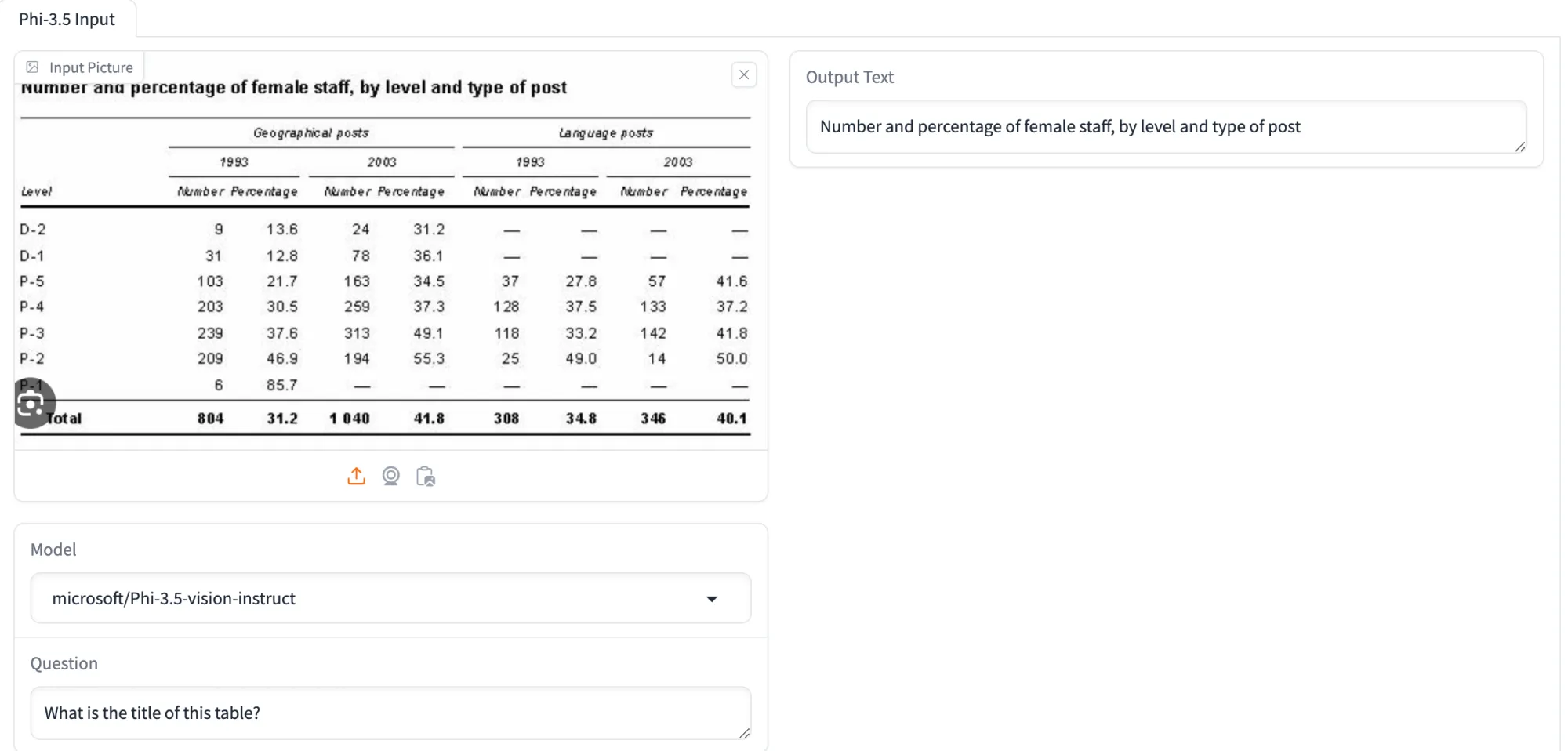

We will mostly talk about multimodals that deal with text and images at the same time. Why? Because during our experimentation we figured this was most relevant to our use cases and also most widely applicable, models like GPT work the same way. We tested GPT and some open source models for the same tasks like differentiating color coded text in tables, understanding strikethrough text, and extracting the table title etc. Both open source models and GPT performed well in extraction of titles, and simple color coded text recognition tasks but gave inaccurate results for more complex tasks. However, there are still a number of things you can do with a multimodal. Here are some of our recommendations.

Best ranked Multimodel

If you are looking for a multimodal, vlm leaderboard is the place you would find the best performing models. We tried some of the models and here are the suggestions.

1- InternVL2-Llama3-76B

This is the best rated vision text multimodal on vlm leaderboard. This model has 76B parameters making it huge size wise. It uses Llama-3-70B-Instruct as a language model and the vision model is InternViT-6B. It tops API based and proprietary models like GPT 4 with an average score of 71 and average ranking of 2.75. For reference GPT-4o has average score of 69.9 and average ranking of 4.38. InternVL 2.0 is available in different sizes with the smallest one being InternVL2-1B. Although all of these models are GPU compatible and would not run on regular CPUs. However, you can still try this model out here.

2- InternLM-XComposer2-1.8B

Most of the multimodels are too big to run on your regular laptop or CPUs. However, we found a lightweight vision-language model. Ranked 48 on the vlm leaderboard, this model has only 2B parameters. The language model used here is InternLM2-1.8B along with the vision model CLIP ViT-L/14. With an average score of 46.9, it beats comparable smaller versions like PaliGemma-3B-mix-448 and LLaVA-LLaMA-3-8B. The best thing is that you can run it using CPUs. Here is how to get do it on Codesphere.You will need to install these dependencies:

python3 -m pip install torch torchvision--index-urlhttps://download.pytorch.org/whl/cpu transformers protobufthen create a file name multimodel.py and add this code

import torch

from transformers import AutoModel, AutoTokenizer

device = torch.device("cpu")

# init model and tokenizer

model = AutoModel.from_pretrained('internlm/internlm-xcomposer2-vl-1_8b', trust_remote_code=True).to(device).eval()

tokenizer = AutoTokenizer.from_pretrained('internlm/internlm-xcomposer2-vl-1_8b', trust_remote_code=True)

query = '<ImageHere>Please describe this image in detail.'

image = './image.jpg'

with torch.cuda.amp.autocast():

response, _ = model.chat(tokenizer, query=query, image=image, history=[], do_sample=False)

print(response)

This was the image we used:

This is the output we got:

The image captures a breathtaking view of the New York City skyline, viewed from a high vantage point.

The cityscape is densely populated with numerous tall buildings, predominantly in shades of gray and brown, interspersed with a few white and blue structures.

The buildings, varying in height, create a dynamic skyline that stretches out into the distance.

The city is enveloped in a hazy mist, adding a sense of depth and mystery to the scene.

The image is taken during the day, under a cloudy sky, which casts a soft light over the city.

The overall mood of the image is serene and tranquil, reflecting the calm and orderly nature of New York City.3- Phi-3.5-vision-instruct

This is another multimodal that is kind of on the lighter side of multimodels by Microsoft. Phi-3.5-vision has 4.2B parameters and contains an image encoder, connector, projector, and Phi-3 Mini language model. The model takes both images and text as input and is best suited for prompts that use the chat format. It also features a relatively big context length of about 128K tokens. It has been recently released and so far the community has not figured out a way to run it on CPUs but it is expected soon enough. The model performs exceptionally well, especially if you want to use it for tasks that require the model to understand the structure of the image and extract text or data. If you find this model interesting, you can try it here.

Multimodal models represent a significant step forward in artificial intelligence. They enable machines to process and understand multiple types of data simultaneously, much like humans do. This makes them extremely useful in cases like ours where a simple LLM fails to deliver substantial outcomes. I hope you found this article helpful.