Deploy Ollama + Open WebUI on Codesphere

Step-by-step guide to deploy Ollama and Open WebUI on Codesphere. Learn how to set up a private, cloud-based LLM environment using the official GitHub repo.

Ollama is a high-performance runtime for running open-source large language models (LLMs) such as Llama 3, Mistral, or Phi-4 on local or remote machines. Open WebUI adds a clean, modern browser-based interface on top, allowing anyone to chat with LLMs in real time.

By combining the two, you get a self-hosted AI assistant that’s private, flexible, and customizable without depending on commercial APIs. Using Codesphere, you can deploy this full stack in a cloud workspace with minimal setup and no infrastructure headaches.

🚀 Step-by-Step Deployment Guide

The full Ollama + Open WebUI setup is available as a public GitHub repository from Codesphere, making it easy to deploy your own self-hosted LLM stack in the cloud.

This repo runs everything in a single workspace, with automatic startup scripts and persistent storage, making it ideal for both experimentation and production use.

Whether you're testing new models, building a private AI assistant, or running secure experiments without local hardware constraints, the repo offers a powerful and frictionless starting point.

🔗 Learn how to deploy the repo step by step:

1. Create a Workspace from the Repo

- Start by creating a new workspace on Codesphere using this GitHub URL:

https://github.com/codesphere-cloud/template-Ollama-Open-WebUI.git

- Use a `Boost` or `Pro` plan to have enough storage for the LLMs.

2. Run the Prepare and Run Stages

- Go to the CI/CD tab in your Codesphere workspace.

a. First, select the ‘prepare’ stage and click Run. This will:

- Copy `.env.example` to `.env`

- Set up all dependencies automatically.

b. Once it finishes, select the ‘run’ stage and click Run. This will:

- Download and install Ollama and Open WebUI

- Start the Ollama server and Open WebUI together
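The first action of the prepare stage is a plain file copy. The sketch below shows a rough Python equivalent, which can be handy if you ever need to recreate `.env` by hand. The helper name `ensure_env_file` is hypothetical, and leaving an existing `.env` untouched is an assumption, not necessarily what the template's own script does.

```python
import shutil
import tempfile
from pathlib import Path


def ensure_env_file(project_dir: str) -> bool:
    """Copy .env.example to .env; return True if a copy was made.

    Assumption: an existing .env is left untouched so local edits survive.
    """
    root = Path(project_dir)
    example = root / ".env.example"
    target = root / ".env"
    if target.exists():
        return False
    if not example.exists():
        raise FileNotFoundError(f"missing template file: {example}")
    shutil.copyfile(example, target)
    return True


if __name__ == "__main__":
    # Demo in a throwaway directory; the setting name below is made up.
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, ".env.example").write_text("EXAMPLE_SETTING=value\n")
        print(ensure_env_file(tmp))  # first call copies the file
        print(ensure_env_file(tmp))  # second call finds .env and does nothing
```

In the workspace itself you would call `ensure_env_file(".")` from the repository root.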

3. Access the Open WebUI

- Access the Open WebUI in your Codesphere workspace through the 'Open deployment' button.

⏳ During the initial setup, it may take a bit longer for the deployment to fully start. If the page doesn’t load immediately, please wait and try again.
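If you would rather script the "wait and try again" step than refresh the browser, a small polling loop is enough. This is a minimal sketch using only the Python standard library; the function names, the retry count, and the delay are illustrative assumptions, and you would substitute your own deployment URL.

```python
import time
import urllib.request
from typing import Callable


def is_up(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except OSError:
        # URLError, HTTPError, timeouts and refused connections all derive from OSError.
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    attempts: int = 30,
    delay: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call probe() up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False


# Example (replace the placeholder with your workspace's deployment URL):
# ready = wait_until_ready(lambda: is_up("https://<your-deployment-url>/"))
```

Passing the probe in as a callable keeps the retry logic separate from the HTTP check, so the loop can be reused for other readiness checks.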

4. Create Your Admin Account

- On first launch, you'll be prompted to create an admin account.

- Enter your email and password to complete the setup.

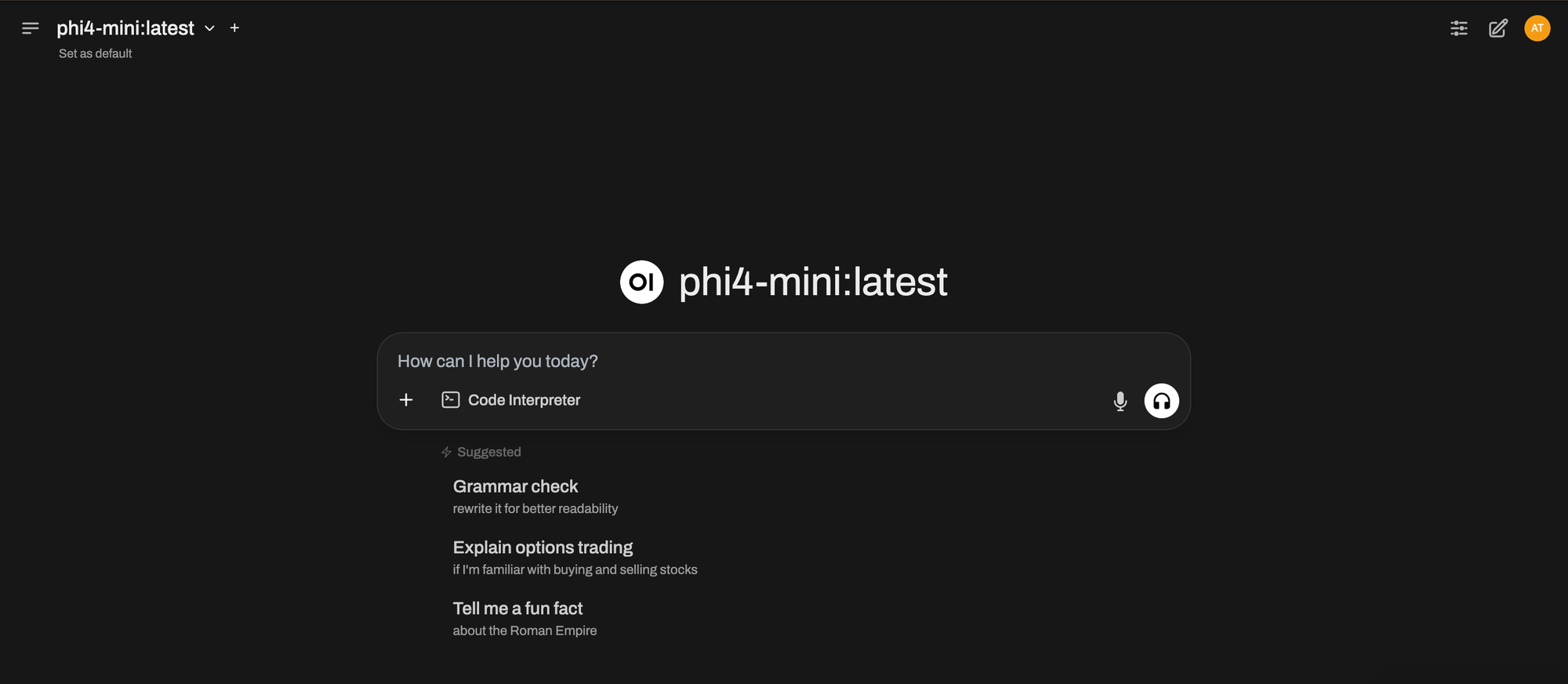

5. Select and Load Your Model

- After logging in, you can select the model to use.

- By default, the workspace installs `phi4-mini:latest`.

- You can switch to other models anytime using the dropdown menu at the top of the interface.

- To explore more models, check out Ollama’s model library (also accessible via Open WebUI).
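The models shown in the dropdown are the ones Ollama has installed, and you can list them from code as well. The sketch below queries Ollama's `/api/tags` endpoint with the standard library. It assumes the Ollama server listens on its default port 11434 and is reachable as `localhost` from a terminal inside the workspace; the template may configure this differently, so treat the URL as a placeholder.

```python
import json
import urllib.request

# Assumption: Ollama's default address, reachable from the workspace terminal.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_response: dict) -> list[str]:
    """Extract sorted model names from the JSON body returned by /api/tags."""
    return sorted(model["name"] for model in tags_response.get("models", []))


def list_installed_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the Ollama server which models are currently installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return parse_model_names(json.load(resp))


# Example, run from inside the workspace:
# print(list_installed_models())
```

On a fresh deployment, the result would be expected to include the default model mentioned above.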

6. Start Chatting

You're now ready to use Ollama with Open WebUI: no local setup and no complex configs, just a clean, private LLM experience running in the cloud. Happy chatting!
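The browser is not the only way to talk to the model. As a minimal sketch, the snippet below sends a single message to Ollama's `/api/chat` endpoint and returns the reply. The base URL (Ollama's default port on `localhost`) and the helper names are assumptions; the model name is the default mentioned in step 5.

```python
import json
import urllib.request


def build_chat_request(model: str, prompt: str) -> dict:
    """Build a non-streaming /api/chat payload with one user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }


def extract_reply(chat_response: dict) -> str:
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return chat_response["message"]["content"]


def chat(
    prompt: str,
    model: str = "phi4-mini:latest",
    base_url: str = "http://localhost:11434",  # assumption: default Ollama address
) -> str:
    """Send one prompt to the Ollama server and return the model's answer."""
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(json.load(resp))


# Example, run from inside the workspace:
# print(chat("Summarize what Ollama does in one sentence."))
```

The generous timeout is deliberate, since the first request after startup may have to load the model into memory.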

With Ollama and Open WebUI on Codesphere, you now have a fast, flexible, and private self-hosted LLM environment for development, experimentation, or daily use. No Docker, no fiddly setup, just capable models in a tidy interface.

Go ahead and try more models, integrate APIs, or customize the UI to your taste. This configuration is just the starting point for what you can build.